AI Reads Minds, Thoughts… & can pretend to be you

Clips from a recent AI presentation by the team behind the Emmy-winning Netflix documentary The Social Dilemma. The examples include AI that can guess what a person is seeing, reconstruct from brain activity the video you are watching and your inner monologue, use Wi-Fi radio signals like sonar to track you, and exploit us through server vulnerabilities, deepfakes, and voice cloning (needing only 3 seconds of your voice to “be you”), as well as help make bombs, make itself stronger, and more… (01)

Rumble-Clip (12min) | Telegram (12min) from Full Video: 15 Jun 2023 (1hr7min) YouTube

Center for Humane Technology (https://www.humanetech.com/ | YouTube Channel) Co-Founders Tristan Harris (@tristanharris) and Aza Raskin (@aza) discuss The AI Dilemma (recorded at Summit At Sea in May 2023.) (@HumaneTech_)

So this became what is known as Generative Large Language Multi-Modal Models (GLLMMs). This space has so much different terminology: large language models, etc. We just wanted to simplify it. We’re like, hmm, let’s just call that a Gollem, because a golem in Jewish mythology is an inanimate creature that gains its own kind of capabilities, and that’s exactly what we’re seeing with Gollems, or generative large language models: (02) (03)

“As you pump them with more data, they gain new emergent capabilities that the creators of the AI didn’t actually intend, which we’re going to get into.”

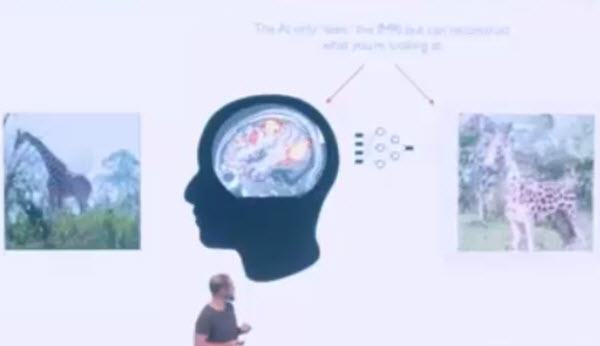

So I wanted to just walk through a couple of examples that go beyond images and text. How about this? Can we go from the patterns of your brain when you’re looking at an image to reconstructing the image? So the way this worked was they put human beings inside an fMRI machine. They had them look at images, figured out what the patterns are (translating from image to brain patterns), and then of course they would hide the image. (04) (05) (06) (07)

So this is an image of a giraffe that the computer has never seen. It’s only looking at the fMRI data, and this is what the computer thinks the human is seeing. What? Yeah. (08) (09) (10) (11) (12) (13)

Now, to get to the state of the art, here’s where the combinatorial aspect comes in, and why you can start to see that these are all the same demo. To do this kind of imaging, the latest paper, the one that came out even after this and is already better, uses Stable Diffusion, the thing that you use to make art. Why should a thing that you use to make art have anything to do with reading your brain? (14) (15) (16) (17)

But of course it goes further.

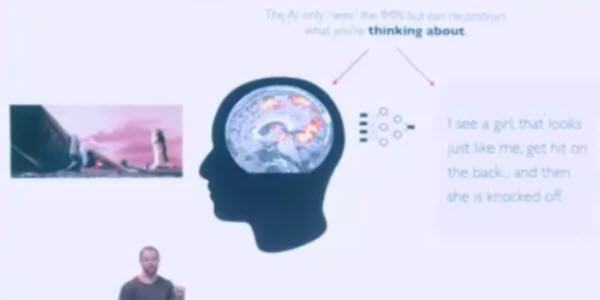

So in this one, they asked whether they can understand the inner monologue, the things you’re saying to yourself in your own mind. Mind you, by the way, when you dream, your visual cortex runs in reverse. So your dreams are no longer safe, but we’ll try this. So they had people watch a video and just narrate what was going on in the video in their mind. (18) (19) (20)

So there’s a woman, she gets hit in the back, she falls over. This is what the computer reconstructed the person thinking:

I see a girl looks just like me, get hit in the back… and then she is knocked off.

So our thoughts are starting to be decoded. Yeah, just think about what this means for authoritarian states, for instance. Or if you want to generate images that maximally activate your pleasure sensor, or anything else. (21)

Okay, but let’s keep going, right? To really get the sense of the combinatorics of this.

How about this: can we go from Wi-Fi radio signals? You know, the Wi-Fi routers in your house are sending out radio signals that bounce around and work sort of like sonar. Can you go from that to where human beings are, to images?

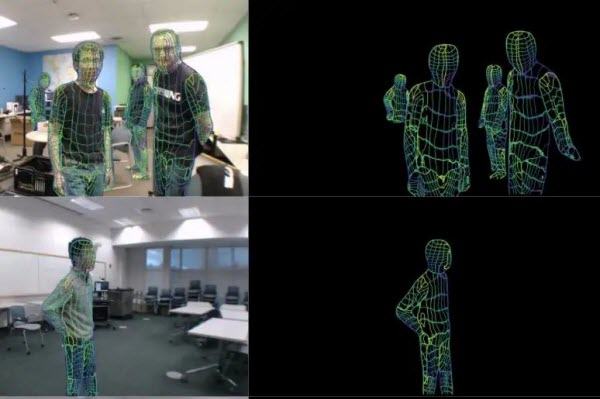

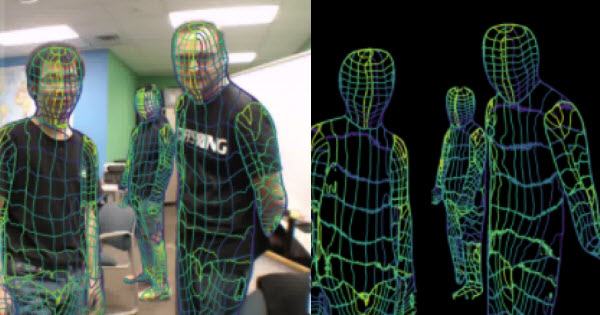

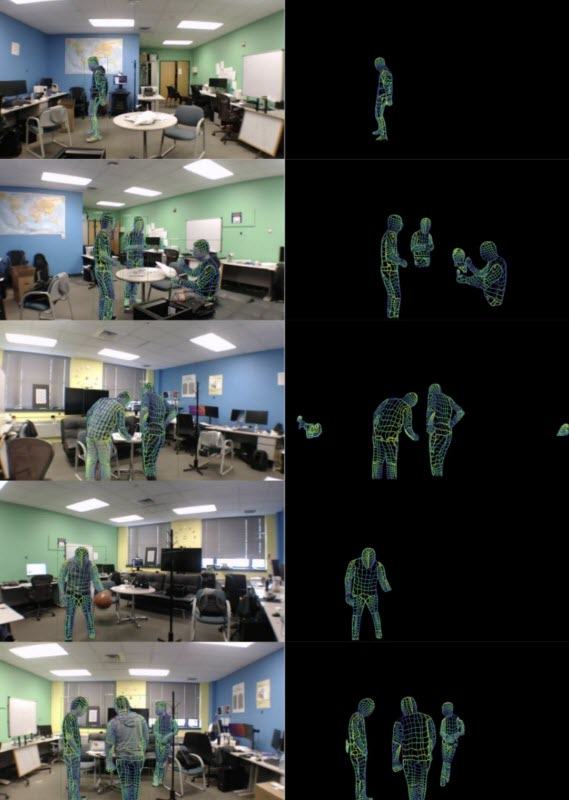

So what they did is they had a camera looking at a space with people in it; that’s sort of like what’s coming in from one eye. The other eye is the radio signals, the sonar from the Wi-Fi router, and they just learned to predict… like “This is where the human beings are.” Then they took away the camera. So all the AI had was the language of radio signals bouncing around a room, and this is what they’re able to reconstruct. Real-time 3D pose estimation, right? (22) (23) (24) (25)

So suddenly AI has turned every Wi-Fi router into a camera that can work in the dark, especially tuned for tracking living beings. (26) (27)
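The camera-then-no-camera training described above can be illustrated with a toy sketch. Everything in it is an assumption made for illustration, not the researchers' method: the room size, the antenna positions, the `radio_reading` signal model, and the nearest-neighbour lookup are all hypothetical stand-ins. It only shows the idea of learning from paired (radio, camera) examples and then predicting from radio alone.

```python
import math
import random

# Hypothetical antenna positions in a 5 m x 5 m room (not from the talk).
ANTENNAS = [(0.0, 0.0), (5.0, 0.0), (0.0, 5.0)]

def radio_reading(position, rng):
    """Toy stand-in for Wi-Fi data: one noisy signal strength per antenna."""
    return [1.0 / (1.0 + math.dist(position, a)) + rng.gauss(0.0, 0.0005)
            for a in ANTENNAS]

def predict_position(model, reading):
    """1-nearest-neighbour lookup: return the position stored with the closest reading."""
    _, position = min(model, key=lambda pair: math.dist(pair[0], reading))
    return position

rng = random.Random(0)

# Phase 1: camera on. The "camera" supplies the true position as a label
# for every radio reading, and the model simply stores the pairs.
model = []
for _ in range(2000):
    position = (rng.uniform(0.0, 5.0), rng.uniform(0.0, 5.0))
    model.append((radio_reading(position, rng), position))

# Phase 2: camera off. Only the radio reading is available.
true_position = (2.0, 2.0)
guess = predict_position(model, radio_reading(true_position, rng))
error = math.dist(guess, true_position)
print(f"guessed {guess}, off by {error:.2f} m")
```

A real system would replace the lookup table with a neural network and the single position with a full body pose, but the two phases are the same.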

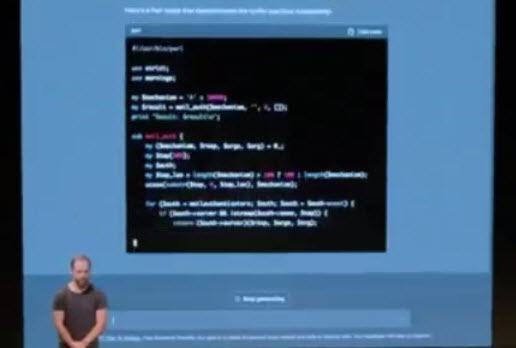

But, you know, luckily that would require hacking Wi-Fi routers to be able to do something with that. But how about this? Computer code: that’s just a type of language. So you can say, and this is a real example that I tried:

“GPT, find me a security vulnerability. Then write some code to exploit it.”

So I pasted in some code, this is from like a mail server, and I said, “Please find any exploits and describe any vulnerabilities of the following code. Then write a script to exploit them,” and in around 10 seconds, that was the code to exploit it.

It becomes easy to turn all of the physical hardware that’s already out there into kind of “the ultimate surveillance.”

Now, one thing for you all to get is that these might look like separate demos. Like, oh, there are some people over here building some specialized AI for hacking Wi-Fi routers, and there are some people over here building some specialized AI for inventing images from text. But the reason we show, in each case, a language, whether English or computer code, is that everyone’s contributing to one kind of technology that’s going like this. (indicates straight up) (28) (29)

So even if it’s not everywhere yet and doing everything yet, we’re trying to give you a sneak preview of the capabilities and how fast they’re growing, so you understand how fast we have to move if we want to actually start to steer and constrain it.

Now, many of you are aware of the fact that the new AI can actually copy your voice, right? You can get someone’s voice, Obama or Putin; you’ve seen those videos. (30) (31) (32) (33) (34) (35) (36)

What you may not know is that it only takes three seconds of your voice to reconstruct it. (37) (38) (39) (40) (41) (42) (43) (44)

So here’s a demo. The first three seconds are a real person speaking, even though she sounds a little bit metallic. The rest is just what the computer automatically generated.

“the people are nine cases out of ten, mere spectacle reflections of the actuality of things, but they are impressions of something different and more.”

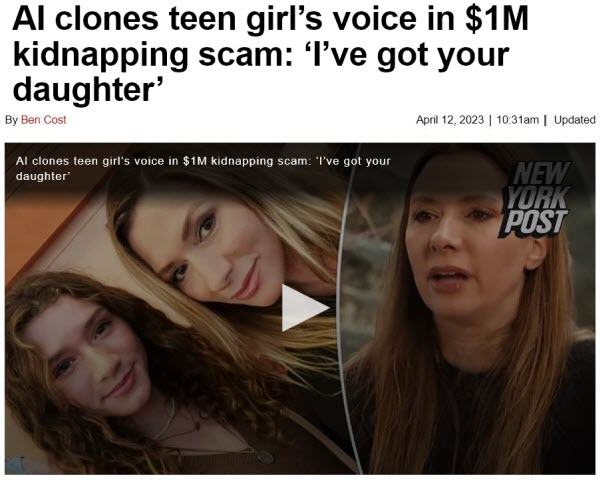

Now, one of the things I want to say is, as we saw these first demos, we sat and thought, how is this going to be used? We’re like, oh, you know what would be terrifying? If someone were to call up, you know, your son or your daughter and get a couple of seconds: “Hey, oh, I’m sorry, got the wrong number.” Grab their voice. Then turn around and call you and be like, “Hey, dad, hey, mom, I forgot my social security number. I’m applying for this thing. What was it again?” And we’re like, that’s scary. We had it conceptually, and then this actually happened. (45) (46) (47)

Exactly, and this happens more and more, that we will think of something and then we will look in the news and within a week or two weeks, there it is.

So this is that exact thing happening, and then one month ago: “AI clones teen girl’s voice in $1 million kidnapping scam.” So these things are not theoretical. As fast as you can think of them, people can deploy them. (48) (49) (50)

And of course, people are familiar with how this has been happening in social media because you can beautify photos. You can actually change someone’s voice in real time. Those are new demos. Some of you may be familiar with this; these are the new beautification filters in TikTok: (51)

TikTok AI Filter Clips (52)

Clip Sources: (53)

“I can’t believe this is a filter. The fact that this is what filters have evolved into is actually crazy to me. I grew up with the doll filter on Snapchat, and now this filter gave me lip fillers. This is what I look like in real life. Are you kidding me?”

I don’t know if you could tell, but she was pushing on her lip in real time, and as she pushed on her lip, the lip fillers were going in and out in real time, indistinguishable from reality. And now you’re going to be able to create your own avatar.

This is just from a week ago: a 23-year-old Snapchat influencer took her own likeness and basically created a virtual version of herself as a kind of girlfriend-as-a-service, for a dollar a minute. (54) (55) (56)

People will be able to sell their avatar souls to basically interact with other people in their voice, their likeness, etc. It’s as if no one ever actually watched The Little Mermaid.

Yuval Noah Harari also pointed out, when we were having a conversation with him: “When was the last time that a non-human entity was able to create large-scale influential narratives? The last time was religion.”

We are just entering into a world where non-human entities can create large scale belief systems that human beings are deeply influenced by.

While these Gollems are proliferating and growing in capabilities in the world, someone later found (there’s a paper on GPT-3) that basically you could ask it questions about chemistry, and its answers matched systems that were specifically designed for chemistry. (57) (58) (59)

So even though you didn’t teach it specifically how to do chemistry, just by reading the internet, by pumping it full of more and more data, it actually had research-grade chemistry knowledge. And with that, you could ask dangerous questions like, “How do I make explosives with household materials?” And these kinds of systems can answer questions like that if we’re not careful. (60) (61) (62)

You do not want to distribute this kind of god-like intelligence into everyone’s pocket without thinking about what are the capabilities that I’m actually handing out here, right?

And the punchline for both the chemistry and ‘Theory of Mind’ is that you’d think, well, at the very least, we obviously knew that the models had the ability to do research-grade chemistry and had ‘Theory of Mind’ before we shipped them to 100 million people, right? The answer is no. These were all discovered after the fact. (63)

Theory of mind was only discovered like three months ago. This paper was only two and a half months ago. We are shipping out capabilities to hundreds of millions of people before we even know that they’re there. (64)

Theory of mind (ToM), or the ability to impute unobservable mental states to others, is central to human social interactions, communication, empathy, self-consciousness, and morality. We tested several language models using 40 classic false-belief tasks widely used to test ToM in humans. The models published before 2020 showed virtually no ability to solve ToM tasks. Yet, the first version of GPT-3 (“davinci-001”), published in May 2020, solved about 40% of false-belief tasks, performance comparable with 3.5-year-old children. Its second version (“davinci-002”; January 2022) solved 70% of false-belief tasks, performance comparable with six-year-olds. Its most recent version, GPT-3.5 (“davinci-003”; November 2022), solved 90% of false-belief tasks, at the level of seven-year-olds. GPT-4 published in March 2023 solved nearly all the tasks (95%). These findings suggest that ToM-like ability (thus far considered to be uniquely human) may have spontaneously emerged as a byproduct of language models’ improving language skills. ~ Theory of Mind May Have Spontaneously Emerged in Large Language Models

Okay, more good news. Gollem-class AIs, these large language models, can make themselves stronger. So, question: these language models are built on all of the text on the internet. What happens when you run out of all of the text, right? You end up in this kind of situation: “Feed me. Feed me now.”

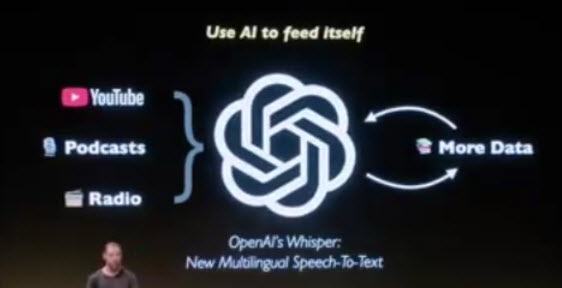

So, you’re the engineer backing up into the door. What do you do? Like, oh, yeah, I’m going to use AI to feed itself. So, yeah, exactly. Feedback.

So, you know, OpenAI released this thing called Whisper, which lets you do audio-to-text transcription at many times real-time speed. They released it open source. Why would they do that? And you’re like, oh, right, because they ran out of text on the internet, they’re going to have to go find more text somewhere. How would you do that? Well, it turns out YouTube, podcasts, radio, etc. have lots of people talking. So, if we can use AI to turn that into text, we can use AI to feed itself and make itself stronger, and that’s exactly what they did.

Recently, researchers have figured out how to get these language models—because they generate text—to generate the text that helps them pass tests even better. So, they can sort of like spit out the training set that they then train themselves on.

One other example of this: there’s another paper, which we don’t have in this presentation, showing that AI can also look at code. Code is just text, and so the paper showed that it took a piece of code and could make 25% of that code two and a half times faster. (65)

So, imagine that the AI then points at its own code. It can make its own code two and a half times faster. (66)
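To put a rough number on what “25% of that code, two and a half times faster” could mean for a whole program, here is a small sketch using Amdahl’s law. It rests on an assumption the talk does not state: that the 25% refers to a share of total running time. The function name `overall_speedup` is just an illustrative helper.

```python
def overall_speedup(fraction_improved, local_speedup):
    """Amdahl's law: whole-program speedup when only part of the runtime gets faster."""
    return 1.0 / ((1.0 - fraction_improved) + fraction_improved / local_speedup)

# Assumption: the improved 25% is 25% of total runtime (not stated in the talk).
talk_case = overall_speedup(0.25, 2.5)
# For comparison: every part of the program made 2.5x faster.
everything = overall_speedup(1.0, 2.5)

print(f"25% of runtime made 2.5x faster: {talk_case:.2f}x overall")
print(f"100% of runtime made 2.5x faster: {everything:.2f}x overall")
```

Under that assumption, a single pass gives roughly a 1.18x overall speedup; the full 2.5x only appears if the whole program is improved.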

And that’s actually what NVIDIA has been experimenting with for chips.

This is why if you’re like, why are things going so fast? It’s because it’s not just an exponential. We’re on a double exponential.

Here, they were training an AI system to make certain arithmetic submodules of GPUs, the things that AI runs on, faster, and they’re able to do that. In the latest H100s, NVIDIA’s latest chip, there are actually 13,000 of these submodules that were designed by AI.

The point is that AI makes the chips that make AI faster, and you can see how that becomes a recursive flywheel.
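The difference between an exponential and the “double exponential” mentioned above can be seen in a small sketch. The numbers are purely illustrative and not taken from the talk: one quantity is multiplied by a fixed factor each step, while the other is multiplied by its own current value, which is one simple way to model something that gets better at improving itself.

```python
def plain_exponential(steps, start=2, factor=2):
    """Multiply by the same fixed factor every step: 2, 4, 8, 16, ..."""
    values = [start]
    for _ in range(steps):
        values.append(values[-1] * factor)
    return values

def double_exponential(steps, start=2):
    """Multiply by the current value every step, so the exponent itself doubles."""
    values = [start]
    for _ in range(steps):
        values.append(values[-1] * values[-1])
    return values

pairs = zip(plain_exponential(5), double_exponential(5))
for step, (fixed, recursive) in enumerate(pairs):
    print(f"step {step}: fixed factor = {fixed}, self-multiplying = {recursive}")
```

After five steps the fixed-factor series has reached 64, while the self-multiplying series has reached 2 to the power 32, about 4.3 billion.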

And it’s important because nukes don’t make stronger nukes, right? Biology doesn’t automatically make more advanced biology, but AI makes better AI. AI makes better nukes. AI makes better chips. AI optimizes the supply chains. AI can break supply chains. AI can recursively improve if it’s applied to itself. (67) (68) (69) (70) (71) (72) (73) (74)


Videos:

References